Reality is consciousness, and is therefore created by patterns of observation and by how we interpret them throughout our lives. One of the ways we do this is through body language. Body language develops naturally as we grow and learn to associate the body movements of others with behavior patterns.

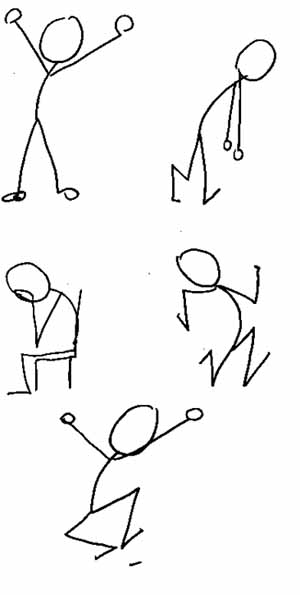

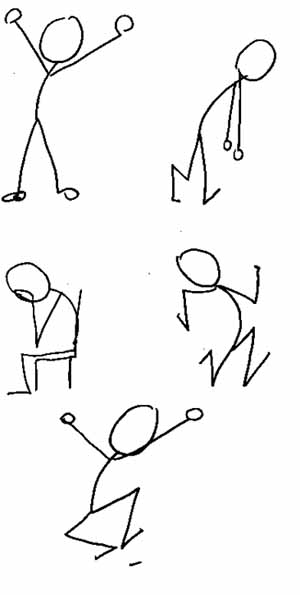

Body language is a form of non-verbal communication, which consists of body posture, gestures, facial expressions, and eye movements. Humans send and interpret such signals subconsciously. It is from the subconscious mind, the soul, that the true value of what we are doing here is shown.

Psychics, who often read people's thoughts, frequently incorporate their client's body language into their readings.

The technique of "reading" people is commonly used in interviews and criminal investigations.

Mirroring the body language of someone else indicates that they are understood.

Like anything else, body language can be interpreted differently in each situation. For example, a stifled yawn can mean a person is either sleepy or simply bored. A fidgety person may have a health problem, may have something else on their mind, or may be behaving that way for any number of other reasons.

Body language may provide clues as to the attitude or state of mind of a person. For example, it may indicate aggression, attentiveness, boredom, relaxed state, pleasure, amusement, and intoxication, among many other cues we easily recognize. Some body language immediately sends out warning signals for us to avoid the person; other body language signals something more positive, such as sexual flirting.

If you pay attention to how much you look at the body language of others, you will see how important it is for you in evaluating who should and should not be in your life.

To me, the message comes more from the other person's ability to make eye contact than from most other forms of subtle or overt body language.

Physical expressions like waving, pointing, touching and slouching are all forms of nonverbal communication. The study of body movement and expression is known as kinesics. Humans move their bodies when communicating because, as research has shown, it helps "ease the mental effort when communication is difficult." Physical expressions reveal many things about the person using them. For example, gestures can emphasize a point or relay a message, posture can reveal boredom or great interest, and touch can convey encouragement or caution.

One of the most basic and powerful body-language signals is when a person crosses his or her arms across the chest. This can indicate that a person is putting up an unconscious barrier between themselves and others. It can also indicate that the person's arms are cold, which would be clarified by rubbing the arms or huddling. When the overall situation is amicable, it can mean that a person is thinking deeply about what is being discussed. But in a serious or confrontational situation, it can mean that a person is expressing opposition. This is especially so if the person is leaning away from the speaker. A harsh or blank facial expression often indicates outright hostility.

Consistent eye contact can indicate that a person is thinking positively of what the speaker is saying. It can also mean that the other person doesn't trust the speaker enough to "take his eyes off" the speaker. Lack of eye contact can indicate negativity. On the other hand, individuals with anxiety disorders are often unable to make eye contact without discomfort.

Eye contact can also be a secondary and misleading gesture because cultural norms about it vary widely. If a person is looking at you, but is making the arms-across-chest signal, the eye contact could be indicative that something is bothering the person, and that he wants to talk about it. Or if a person is fiddling with something while making direct eye contact, it could indicate that the attention is elsewhere. Also, there are three standard areas at which a person will look, each representing a different state of being. If the person looks from one eye to the other and then to the forehead, it is a sign that they are taking an authoritative position. If they move from one eye to the other and then to the nose, that signals that they are engaging in what they consider to be a "level conversation" with neither party holding superiority. The last case is from one eye to the other and then down to the lips. This is a strong indication of romantic feelings.

Disbelief is often indicated by averted gaze, or by touching the ear or scratching the chin. When a person is not being convinced by what someone is saying, the attention invariably wanders, and the eyes will stare away for an extended period.

Boredom is indicated by the head tilting to one side, or by the eyes looking straight at the speaker but becoming slightly unfocused. A head tilt may also indicate a sore neck or amblyopia, and unfocused eyes may indicate ocular problems in the listener.

Interest can be indicated through posture or extended eye contact, such as standing attentively while listening.

Deceit or the act of withholding information can sometimes be indicated by touching the face during conversation. Excessive blinking is a well-known indicator of someone who is lying. More recently, evidence has surfaced that an absence of blinking can also indicate lying, and may be a more reliable indicator than excessive blinking.

Some people use and understand body language differently, or not at all. Interpreting their gestures and facial expressions (or lack thereof) in the context of normal body language usually leads to misunderstandings and misinterpretations (especially if body language is given priority over spoken language). It should also be stated that people from different cultures can interpret body language in different ways.

Some researchers put the level of nonverbal communication as high as 80 percent of all communication, when it could be at around 50-65 percent. Different studies have found differing amounts, with some studies showing that facial communication is believed 4.3 times more often than verbal meaning, and another finding that verbal communication in a flat tone is 4 times more likely to be understood than a pure facial expression. Albert Mehrabian is noted for finding a 7%-38%-55% rule, supposedly denoting how much communication was conferred by words, tone, and body language. However, he was only referring to cases of expressing feelings or attitudes.

Interpersonal space refers to the psychological "bubble" that we can imagine exists when someone is standing too close to us. Research has revealed that there are four different zones of interpersonal space. The first zone is called intimate distance and ranges from touching to about eighteen inches (46 cm) apart. Intimate distance is the space around us that we reserve for lovers, children, and close family members and friends.

The second zone is called personal distance and begins about an arm's length away; starting around eighteen inches (46 cm) from our person and ending about four feet (122 cm) away. We use personal distance in conversations with friends, to chat with associates, and in group discussions.

The third zone of interpersonal space is called social distance and is the area that ranges from four to eight feet (1.2 m - 2.4 m) away from you. Social distance is reserved for strangers, newly formed groups, and new acquaintances. The fourth identified zone of space is public distance and includes anything more than eight feet (2.4 m) away from you. This zone is used for speeches, lectures, and theater; essentially, public distance is that range reserved for larger audiences.
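As a rough illustration, the four zones described above can be written as a small lookup in Python. This is only a sketch: the function name `interpersonal_zone` is made up for this example, and the decision to count a distance that falls exactly on a boundary (18 inches, 4 feet, 8 feet) as part of the closer zone is an assumption, since the text does not say which zone owns the boundary.

```python
def interpersonal_zone(distance_ft: float) -> str:
    """Return the interpersonal-space zone for a distance given in feet.

    Boundaries follow the text: 18 inches (1.5 ft), 4 ft, and 8 ft.
    Assumption: a distance exactly on a boundary belongs to the closer zone.
    """
    if distance_ft < 0:
        raise ValueError("distance must be non-negative")
    if distance_ft <= 1.5:
        return "intimate"
    if distance_ft <= 4.0:
        return "personal"
    if distance_ft <= 8.0:
        return "social"
    return "public"


# Example: a conversation partner standing three feet away.
print(interpersonal_zone(3.0))
```

The distances are approximate in the text ("about eighteen inches", "about four feet"), so the hard cut-offs here should be read as a convenience, not as precise limits.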

Recently, there has been huge interest in studying human behavioral clues that could be useful for developing an interactive and adaptive human-machine system. Unintentional human gestures such as an eye rub, a chin rest, a lip touch, a nose itch, a head scratch, an ear scratch, crossed arms, and a finger lock have been found to convey useful information in specific contexts. Some researchers have tried to extract such gestures in the specific context of educational applications. In poker games, such gestures are referred to as "tells" and are useful to players for detecting deception or behavioral patterns in an opponent.

Body language to be read by computers one of new innovative solutions PhysOrg - October 1, 2010

A consortium of European researchers thinks computers can read our body language, and has developed a range of innovative solutions from escalator safety to online marketing.

The keyboard and mouse are no longer the only means of communicating with computers. Modern consumer devices will respond to the touch of a finger and even the spoken word, but can we go further still? Can a computer learn to make sense of how we walk and stand, to understand our gestures and even to read our facial expressions? The EU-funded MIAUCE project set out to do just that.

"The motivation of the project is to put humans in the loop of interaction between the computer and their environment," explains project coordinator Chaabane Djeraba, of CNRS in Lille. "We would like to have a form of ambient intelligence where computers are completely hidden," he says. This means a multimodal interface so people can interact with their environment. The computer sees their behavior and then extracts information useful for the user. It is hard to imagine a world where hidden computers try to anticipate our needs, so the MIAUCE project has developed concrete prototypes of three kinds of applications.

Escalator Accidents

The first is to monitor the safety of crowds at busy places such as airports and shopping centres. Surveillance cameras are used to detect situations such as accidents on escalators. "The background technology of this research is based on computer vision. We extract information from videos. This is the basic technology and technical method we use," says Djeraba.

It’s quite a challenge. First, the video stream must be analyzed in real time to extract a hierarchy of three levels of features. At its lowest, this is a mathematical description of shapes, movements and flows. At the next level, this basic description is interpreted in terms of crowd density, speed and direction. At the highest level, the computer is able to decide when the activity becomes ‘abnormal’, perhaps because someone has fallen on an escalator and caused a pile-up that needs urgent intervention.
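To make the three levels more concrete, here is a toy sketch in Python. It is not the MIAUCE method, which the article does not describe in detail. The `Track` class, the `crowd_summary` and `is_abnormal` functions, and both threshold values are hypothetical, chosen only to show how low-level motion data might be summarized and then judged.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """Low-level feature: one tracked point, position in metres, velocity in m/s."""
    x: float
    y: float
    vx: float
    vy: float


def crowd_summary(tracks, area_m2):
    """Mid-level features: crowd density, mean speed, and mean heading."""
    n = len(tracks)
    if n == 0:
        return {"density": 0.0, "speed": 0.0, "heading_deg": None}
    speed = sum(math.hypot(t.vx, t.vy) for t in tracks) / n
    mean_vx = sum(t.vx for t in tracks) / n
    mean_vy = sum(t.vy for t in tracks) / n
    # Heading is undefined when the average velocity is exactly zero.
    heading = math.degrees(math.atan2(mean_vy, mean_vx)) if (mean_vx or mean_vy) else None
    return {"density": n / area_m2, "speed": speed, "heading_deg": heading}


def is_abnormal(summary, max_density=2.0, min_speed=0.2):
    """High-level decision: a dense crowd that has almost stopped moving.

    Both thresholds are illustrative guesses, not values from the project.
    """
    return summary["density"] > max_density and summary["speed"] < min_speed


# Four people moving steadily versus ten people bunched up and nearly still.
flowing = [Track(i * 0.5, 0.0, 0.5, 0.0) for i in range(4)]
pile_up = [Track(i * 0.1, 0.0, 0.0, 0.05) for i in range(10)]
print(is_abnormal(crowd_summary(flowing, area_m2=4.0)))
print(is_abnormal(crowd_summary(pile_up, area_m2=4.0)))
```

A real system would first have to obtain the tracks from the video stream, which is the hard computer vision step; this sketch starts from tracks that are simply given.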

It is with the second level and the third, semantic level of interpretation that MIAUCE has been most concerned. One of the MIAUCE partners is already working with a manufacturer of escalators to augment existing video monitoring systems at international airports where there may be hundreds of escalators. If a collapse can be detected automatically then the seconds saved in responding could save lives as well. But safety is only one possible kind of application where computers could read our body language.

Face swapping

A second could be in marketing, specifically to monitor how customers behave in shops. We would like to analyse how people walk around in a shop and the behavior of people in the shop, where they look, for example. The same partner is developing two products. One will be a ‘people counter’ to monitor pedestrian flows in the street outside a shop. It is expected to be particularly attractive to fashion stores who wish to attract passers-by.

Another is a ‘heat map generator’ to watch the movements of people inside the store, so that the manager can see which parts of the displays are attracting the most attention.
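The idea behind such a heat map can be sketched in a few lines of Python: divide the shop floor into a grid and count how many position samples fall in each cell. This is an assumed, simplified approach, not the partner's actual product. The function name `heat_map`, the one-metre cell size, and the sample positions are all invented for illustration.

```python
import math


def heat_map(positions, width_m, depth_m, cell_m=1.0):
    """Count position samples per grid cell of a rectangular floor.

    positions: iterable of (x, y) pairs in metres, origin at one corner.
    Returns a list of rows; grid[row][col] is the number of samples in that cell.
    Samples outside the floor are ignored.
    """
    cols = math.ceil(width_m / cell_m)
    rows = math.ceil(depth_m / cell_m)
    grid = [[0] * cols for _ in range(rows)]
    for x, y in positions:
        if 0 <= x < width_m and 0 <= y < depth_m:
            col = min(int(x // cell_m), cols - 1)
            row = min(int(y // cell_m), rows - 1)
            grid[row][col] += 1
    return grid


# Hypothetical samples: two near the entrance corner, one by a far display,
# and one stray reading outside the 3 m x 2 m floor.
samples = [(0.5, 0.5), (0.7, 0.2), (2.5, 1.5), (9.0, 9.0)]
for row in heat_map(samples, width_m=3.0, depth_m=2.0):
    print(row)
```

Because the grid stores only counts per cell, nothing in it identifies an individual shopper.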

The third application addressed by MIAUCE is interactive web television, a technology of increasing interest where viewers can select what they want to see. As part of the project, the viewer’s webcam is used to monitor their face to see which part of the screen they are looking at.

It could be used to feed the user further information based on the evidence of what they have shown an interest in. Project partner Tilde, a software company in Latvia, is commercializing this application. MIAUCE has also developed a related technology of 'face swapping' in which the viewer's face can replace that of a model. This could be used for trying out hairstyles and clothing.

Ethics and anonymity

These are all ingenious applications but are there not ethical and legal worries about reading people’s behavior in this way? Djeraba acknowledges that the project team took such issues very seriously and several possible applications of their technology were ruled out on such grounds.

They worked to some basic rules, such as placing cameras only on private premises and always with a warning notice, but the fundamental principle was anonymity. They have to anonymize people. What they are doing here is analyzing user behavior without any identification; this is a fundamental requirement for such systems.

They also took account of whether the applications would be acceptable to society as a whole. No one would reasonably object to the monitoring of escalators, for example, if the aim was to improve public safety. But the technology must not identify individuals or even such characteristics as skin color.